AI to protect you from voice fraud and scams

We develop cutting-edge technology to help you trust what you hear


The rising threat of voice fraud

Generative AI has turbocharged voice-related fraud, and it has only just started.

Identity theft, media deepfakes, and robocalling are all being taken to the next level.

AI: Voice cloning tech emerges in Sudan civil war

A campaign using artificial intelligence to impersonate Omar al-Bashir, the former leader of Sudan, has received hundreds of thousands of views on TikTok, adding online confusion to a country torn apart by civil war.

forbes.com › news

How AI Is Changing Social Engineering Forever

On the one hand, AI creators have facilitated incredibly fast adoption by equipping advanced AI chatbots (like Bard and ChatGPT) with the simplest consumer user interface known: a text window to query the machine with promp...

forbes.com › news

How voice cloning through artificial intelligence is being used for scams | Explained

Voice clone fraud has been on the rise in India. A report published in May last year revealed that 47% of surveyed Indians had either been a victim or knew someone who had fallen prey to an AI-generated voice scam.

timesofindia.com › articles

About 83% Indians have lost money in AI voice scams: Report

According to a report by McAfee, more than half (69%) of Indians think they don’t know or cannot tell the difference between an AI voice and real voice. Furthermore, about half (47%) of Indian adults have experienced or...

pcmag.com › news

Hacker Deepfakes Employee's Voice in Phone Call to Breach IT Company

Retool says social engineering, an AI deepfake, and a weakness in Google's Authenticator app helped the hacker breach the company last month...

cbs.com › news

Scammers use AI to mimic voices of loved ones in distress

Artificial intelligence is making phone scams more sophisticated — and more believable. Scam artists are now using the technology to clone voices, including those of friends and family....

wired.com › story

Deepfake Audio Is a Political Nightmare

British fact-checkers are racing to debunk a suspicious audio recording of UK opposition leader Keir Starmer.

No one wants to live in a world where this can happen. It's time to use AI to combat AI.

We are revolutionizing voice security with advanced AI

We build solutions powered by deep learning for authenticity and identity verification

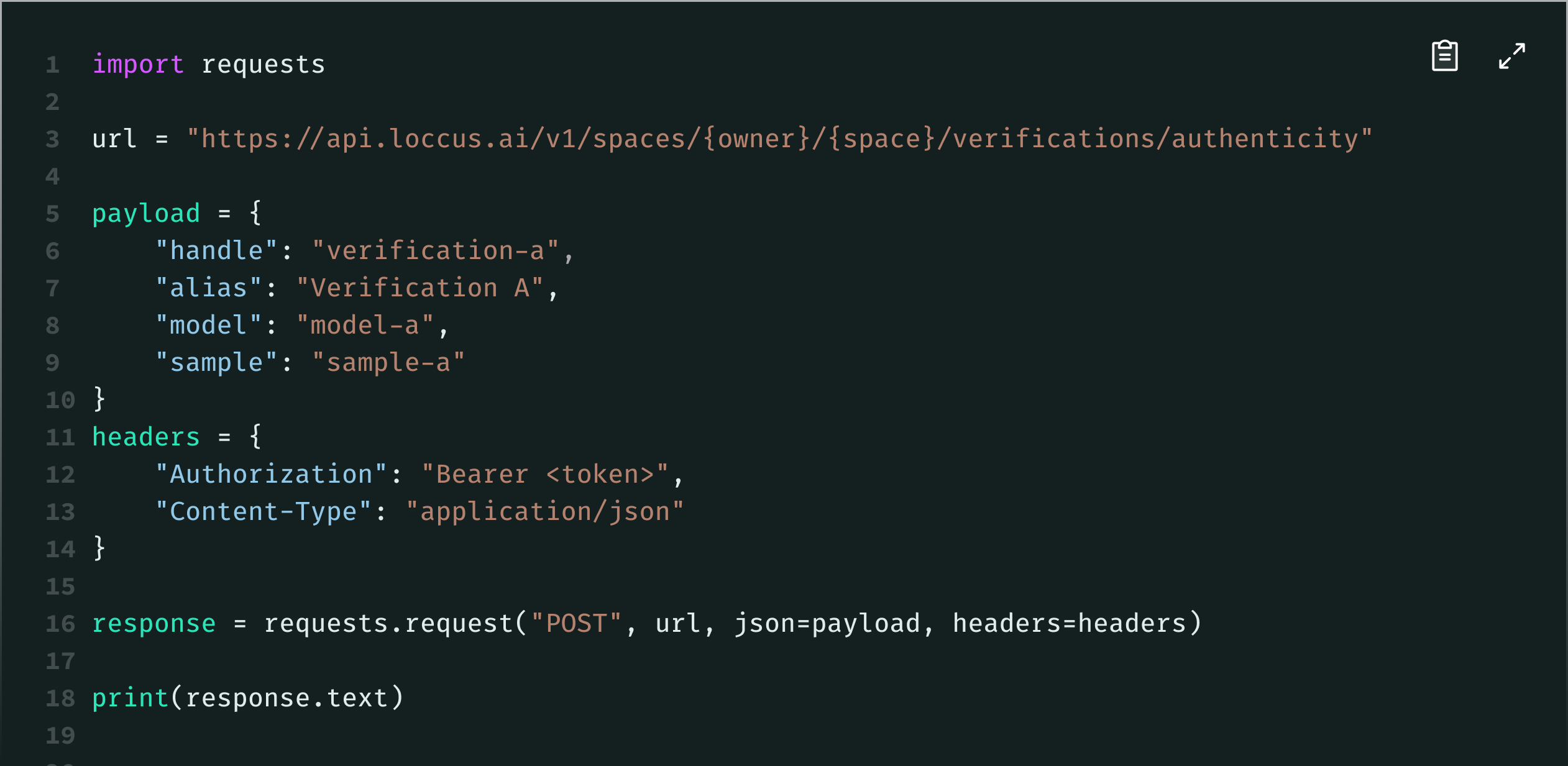

Authenticity verification

Detect if a voice is real or generated with AI.

Identity verification

Verify someone's identity using their voice.

Superior accuracy and flexibility

Voice is everything we do. We bet on depth over breadth.

Our research team has decades of experience in speech and signal processing, allowing us to find unique features and insights in the voice spectrum.

This deep understanding of voice is what allows us to build superior products regardless of the audio channel or language we work with.

Accuracy

Over 98% accuracy on the most complex "In-the-Wild" datasets, setting the industry benchmark.

Speed

Verification in a fraction of a second, suitable for real-time applications.

Flexibility

Language- and channel-independent, including on the most challenging low-quality audio.